After answering so many different questions about how to use various parts and components of the “Kinect v2 with MS-SDK”-package, I think it would be easier if I share some general tips, tricks and examples. I’m going to add more tips and tricks to this article over time. Feel free to drop by from time to time to check out what’s new.

And here is a link to the Online documentation of the K2-asset.

Table of Contents:

What is the purpose of all managers in the KinectScripts-folder

How to use the Kinect v2-Package functionality in your own Unity project

How to use your own model with the AvatarController

How to make the avatar hands twist around the bone

How to utilize Kinect to interact with GUI buttons and components

How to get the depth- or color-camera textures

How to get the position of a body joint

How to make a game object rotate as the user

How to make a game object follow user’s head position and rotation

How to get the face-points’ coordinates

How to mix Kinect-captured movement with Mecanim animation

How to add your models to the FittingRoom-demo

How to set up the sensor height and angle

Are there any events, when a user is detected or lost

How to process discrete gestures like swipes and poses like hand-raises

How to process continuous gestures, like ZoomIn, ZoomOut and Wheel

How to utilize visual (VGB) gestures in the K2-asset

How to change the language or grammar for speech recognition

How to run the fitting-room or overlay demo in portrait mode

How to build an exe from ‘Kinect-v2 with MS-SDK’ project

How to make the Kinect-v2 package work with Kinect-v1

What do the options of ‘Compute user map’-setting mean

How to set up the user detection order

How to enable body-blending in the FittingRoom-demo, or disable it to increase FPS

How to build Windows-Store (UWP-8.1) application

How to work with multiple users

How to use the FacetrackingManager

How to add background image to the FittingRoom-demo

How to move the FPS-avatars of positionally tracked users in VR environment

How to create your own gestures

How to enable or disable the tracking of inferred joints

How to build exe with the Kinect-v2 plugins provided by Microsoft

How to build Windows-Store (UWP-10) application

How to run the projector-demo scene

How to render background and the background-removal image on the scene background

How to run the demo scenes on non-Windows platforms

How to work around the user tracking issue, when the user is turned back

How to get the full scene depth image as texture

Some useful hints regarding AvatarController and AvatarScaler

How to setup the K2-package to work with Orbbec Astra sensors (deprecated)

How to setup the K2-asset to work with Nuitrack body tracking SDK

How to control Keijiro’s Skinner-avatars with the Avatar-Controller component

How to track a ball hitting a wall (hints)

How to create your own programmatic gestures

What is the file-format used by the KinectRecorderPlayer-component (KinectRecorderDemo)

How to enable user gender and age detection in KinectFittingRoom1-demo scene

What is the purpose of all managers in the KinectScripts-folder:

The managers in the KinectScripts-folder are components. You can utilize them in your projects, depending on the features you need:

- The KinectManager is the most general component, needed to interact with the sensor and to get basic data from it, like the color and depth streams, and the bodies and joints’ positions in meters, in Kinect space;

- The AvatarController transfers the detected joint positions and orientations to a rigged skeleton;

- The CubemanController is similar, but it works with transforms and lines to represent the joints and bones, in order to make locating the tracking issues easier;

- The FacetrackingManager deals with the face points and head/neck orientation. It is used internally by the KinectManager (if available at the same time) to get the precise position and orientation of the head and neck;

- The InteractionManager is used to control the hand cursor and to detect hand grips, releases and clicks;

- The SpeechManager is used for recognition of speech commands.

Also take a look at the Samples-folder. It contains several simple examples (some of them cited below) that you can learn from, use directly, or copy parts of into your own scripts.

How to use the Kinect v2-Package functionality in your own Unity project:

1. Copy folder ‘KinectScripts’ from the Assets/K2Examples-folder of the package to your project. This folder contains the package scripts, filters and interfaces.

2. Copy folder ‘Resources’ from the Assets/K2Examples-folder of the package to your project. This folder contains all needed libraries and resources. You can skip copying the libraries you don’t plan to use, in order to save space.

3. Copy folder ‘Standard Assets’ from the Assets/K2Examples-folder of the package to your project. It contains the wrapper classes for Kinect-v2 SDK.

4. Wait until Unity detects and compiles the newly copied resources, folders and scripts.

See this tip as well, if you would like to build your project with the Kinect-v2 plugins provided by Microsoft.

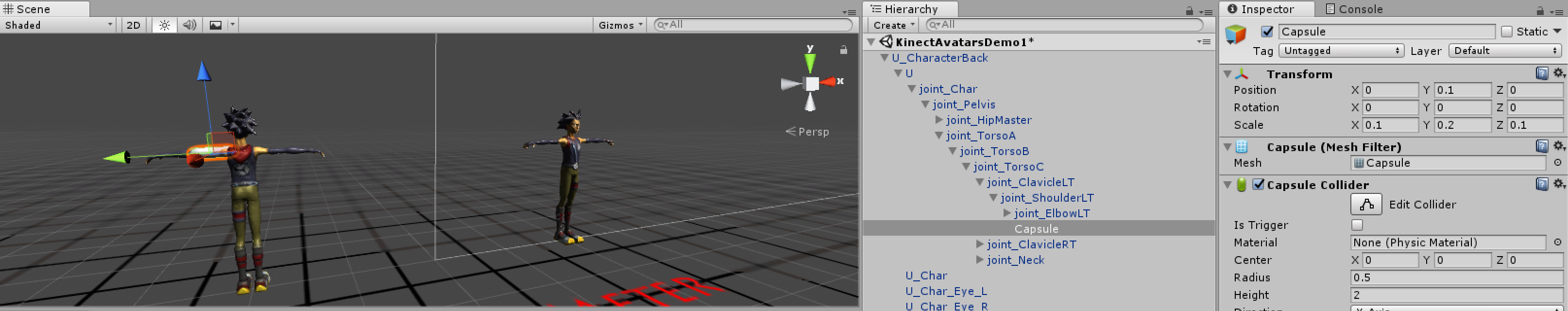

How to use your own model with the AvatarController:

1. (Optional) Make sure your model is in T-pose. This is the zero-pose of Kinect joint orientations.

2. Select the model-asset in Assets-folder. Select the Rig-tab in Inspector window.

3. Set the AnimationType to ‘Humanoid’ and AvatarDefinition – to ‘Create from this model’.

4. Press the Apply-button. Then press the Configure-button to make sure the joints are correctly assigned. After that exit the configuration window.

5. Put the model into the scene.

6. Add the KinectScripts/AvatarController-script as component to the model’s game object in the scene.

7. Make sure your model also has Animator-component, it is enabled and its Avatar-setting is set correctly.

8. Enable or disable (as needed) the MirroredMovement and VerticalMovement-settings of the AvatarController-component. Keep in mind that when mirrored movement is enabled, the model’s transform should have a Y-rotation of 180 degrees.

9. Run the scene to test the avatar model. If needed, tweak some settings of AvatarController and try again.

How to make the avatar hands twist around the bone:

To do it, you need to set ‘Allowed Hand Rotations’-setting of the KinectManager to ‘All’. KinectManager is a component of the MainCamera in the example scenes. This setting has three options: None – turns off all hand rotations, Default – turns on the hand rotations, except the twists around the bone, All – turns on all hand rotations.

How to utilize Kinect to interact with GUI buttons and components:

1. Add the InteractionManager to the main camera or to another persistent object in the scene. It is used to control the hand cursor and to detect hand grips, releases and clicks. A grip means a closed hand with the thumb over the other fingers, and a release means an opened hand. A hand click is generated when the user’s hand stays still for about 2 seconds.

2. Enable the ‘Control Mouse Cursor’-setting of the InteractionManager-component. This setting transfers the position and clicks of the hand cursor to the mouse cursor, this way enabling interaction with the GUI buttons, toggles and other components.

3. If you need drag-and-drop functionality for interaction with the GUI, enable the ‘Control Mouse Drag’-setting of the InteractionManager-component. This setting starts mouse dragging, as soon as it detects hand grip and continues the dragging until hand release is detected. If you enable this setting, you can also click on GUI buttons with a hand grip, instead of the usual hand click (i.e. staying in place, over the button, for about 2 seconds).

How to get the depth- or color-camera textures:

First off, make sure that ‘Compute User Map’-setting of the KinectManager-component is enabled, if you need the depth texture, or ‘Compute Color Map’-setting of the KinectManager-component is enabled, if you need the color camera texture. Then write something like this in the Update()-method of your script:

    KinectManager manager = KinectManager.Instance;

    if(manager && manager.IsInitialized())
    {
        Texture2D depthTexture = manager.GetUsersLblTex();
        Texture2D colorTexture = manager.GetUsersClrTex();
        // do something with the textures
    }
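
As a rough sketch of what "doing something with the textures" could look like, the script below shows the color-camera image on a UI RawImage. The class name `ColorTextureViewer` and its `targetImage` field are hypothetical and not part of the K2-asset. Only the KinectManager calls are taken from the snippet above, and `GetUsersClrTex()` is assumed to return a `Texture2D`, as it does there.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Hypothetical helper (not part of the K2-asset):
// shows the Kinect color-camera image on a UI RawImage.
public class ColorTextureViewer : MonoBehaviour
{
    // Assign a RawImage from the scene's Canvas in the Inspector.
    public RawImage targetImage;

    void Update()
    {
        KinectManager manager = KinectManager.Instance;

        if (manager && manager.IsInitialized() && targetImage)
        {
            // Needs the 'Compute Color Map'-setting of KinectManager to be enabled.
            Texture2D colorTexture = manager.GetUsersClrTex();

            // Re-assign only when the texture reference changes.
            if (colorTexture && targetImage.texture != colorTexture)
            {
                targetImage.texture = colorTexture;
            }
        }
    }
}
```

To try it, add the script to any game object and drag a RawImage onto its `targetImage` field. The same pattern should work for the depth texture, by calling `GetUsersLblTex()` instead.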

How to get the position of a body joint:

This is demonstrated in KinectScripts/Samples/GetJointPositionDemo-script. You can add it as a component to a game object in your scene to see it in action. Just select the needed joint and optionally enable saving to a csv-file. Do not forget to add the KinectManager as component to a game object in your scene. It is usually a component of the MainCamera in the example scenes. Here is the main part of the demo-script that retrieves the position of the selected joint:

    KinectInterop.JointType joint = KinectInterop.JointType.HandRight;
    KinectManager manager = KinectManager.Instance;

    if(manager && manager.IsInitialized())
    {
        if(manager.IsUserDetected())
        {
            long userId = manager.GetPrimaryUserID();

            if(manager.IsJointTracked(userId, (int)joint))
            {
                Vector3 jointPos = manager.GetJointPosition(userId, (int)joint);
                // do something with the joint position
            }
        }
    }

How to make a game object rotate as the user:

This is similar to the previous example and is demonstrated in KinectScripts/Samples/FollowUserRotation-script. To see it in action, you can create a cube in your scene and add the script as a component to it. Do not forget to add the KinectManager as component to a game object in your scene. It is usually a component of the MainCamera in the example scenes.
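
For readers who prefer to see the idea in code, here is a minimal sketch of such a script. It is not the FollowUserRotation-script itself. The class name, the `joint` and `smoothFactor` fields are hypothetical, and the two-argument `GetJointOrientation()` call simply mirrors the usage shown in this article. Your version of the asset may expect additional parameters.

```csharp
using UnityEngine;

// Hypothetical sketch (not the asset's FollowUserRotation-script):
// rotates this game object with one of the user's joints.
public class FollowJointRotationSketch : MonoBehaviour
{
    // Joint whose orientation is applied. Neck is only an example.
    public KinectInterop.JointType joint = KinectInterop.JointType.Neck;

    // Higher values follow the user faster. 0 applies the orientation instantly.
    public float smoothFactor = 10f;

    void Update()
    {
        KinectManager manager = KinectManager.Instance;
        if (!manager || !manager.IsInitialized() || !manager.IsUserDetected())
            return;

        long userId = manager.GetPrimaryUserID();
        if (!manager.IsJointTracked(userId, (int)joint))
            return;

        // Assumption: same two-argument call as used elsewhere in this article.
        Quaternion jointRotation = manager.GetJointOrientation(userId, (int)joint);

        // Quaternion.Slerp clamps its interpolation factor to [0, 1].
        transform.rotation = smoothFactor > 0f
            ? Quaternion.Slerp(transform.rotation, jointRotation, smoothFactor * Time.deltaTime)
            : jointRotation;
    }
}
```

Add it to a cube, run the scene and turn in front of the sensor. The smoothing is a design choice to hide tracking jitter and can be turned off by setting `smoothFactor` to 0.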

How to make a game object follow user’s head position and rotation:

You need the KinectManager and FacetrackingManager added as components to a game object in your scene. For example, they are components of the MainCamera in the KinectAvatarsDemo-scene. Then, to get the position of the head and orientation of the neck, you need code like this in your script:

    KinectManager manager = KinectManager.Instance;

    if(manager && manager.IsInitialized())
    {
        if(manager.IsUserDetected())
        {
            long userId = manager.GetPrimaryUserID();

            if(manager.IsJointTracked(userId, (int)KinectInterop.JointType.Head))
            {
                Vector3 headPosition = manager.GetJointPosition(userId, (int)KinectInterop.JointType.Head);
                Quaternion neckRotation = manager.GetJointOrientation(userId, (int)KinectInterop.JointType.Neck);
                // do something with the head position and neck orientation
            }
        }
    }

How to get the face-points’ coordinates:

You need a reference to the respective FaceFrameResult-object. This is demonstrated in KinectScripts/Samples/GetFacePointsDemo-script. You can add it as a component to a game object in your scene, to see it in action. To get the coordinates of a face point in your script, you need to invoke its public GetFacePoint()-function. Do not forget to add the KinectManager and FacetrackingManager as components to a game object in your scene. For example, they are components of the MainCamera in the KinectAvatarsDemo-scene.

How to mix Kinect-captured movement with Mecanim animation

1. Use the AvatarControllerClassic instead of AvatarController-component. Assign only those joints that have to be animated by the sensor.

2. Set the SmoothFactor-setting of AvatarControllerClassic to 0, to apply the detected bone orientations instantly.

3. Create an avatar-body-mask and apply it to the Mecanim animation layer. In this mask, disable Mecanim animations of the Kinect-animated joints mentioned above. Do not disable the root-joint!

4. Enable the ‘Late Update Avatars’-setting of KinectManager (component of MainCamera in the example scenes).

5. Run the scene to check the setup. When a player gets recognized by the sensor, some of the avatar’s joints will be animated by the AvatarControllerClassic component, and the rest by the Animator component.

How to add your models to the FittingRoom-demo

1. For each of your fbx-models, import the model and select it in the Assets-view in Unity editor.

2. Select the Rig-tab in Inspector. Set the AnimationType to ‘Humanoid’ and the AvatarDefinition to ‘Create from this model’.

3. Press the Apply-button. Then press the Configure-button to check if all required joints are correctly assigned. The clothing models usually don’t use all joints, which can make the avatar definition invalid. In this case you can assign manually the missing joints (shown in red).

4. Keep in mind: The joint positions in the model must match the structure of the Kinect-joints. You can see them, for instance in the KinectOverlayDemo2. Otherwise the model may not overlay the user’s body properly.

5. Create a sub-folder for your model category (Shirts, Pants, Skirts, etc.) in the FittingRoomDemo/Resources-folder.

6. In the model category folder, create sub-folders with consecutive numbers (0000, 0001, 0002, etc.), one for each model imported in step 1.

7. Move your models into these numerical folders, one model per folder, along with the needed materials and textures. Rename the model’s fbx-file to ‘model.fbx’.

8. You can put a preview image for each model in jpeg-format (100 x 143px, 24bpp) in the respective model folder. Then rename it to ‘preview.jpg.bytes’. If you don’t put a preview image, the fitting-room demo will display ‘No preview’ in the model-selection menu.

9. Open the FittingRoomDemo1-scene.

10. Add a ModelSelector-component for each model category to the KinectController game object. Set its ‘Model category’-setting to be the same as the name of the sub-folder created in step 5 above. Set the ‘Number of models’-setting to reflect the number of sub-folders created in step 6 above.

11. The other settings of your ModelSelector-component must be similar to the existing ModelSelector in the demo. I.e. ‘Model relative to camera’ must be set to ‘BackgroundCamera’, ‘Foreground camera’ must be set to ‘MainCamera’, ‘Continuous scaling’ – enabled. The scale-factor settings may be set initially to 1 and the ‘Vertical offset’-setting to 0. Later you can adjust them slightly to provide the best model-to-body overlay.

12. Enable the ‘Keep selected model’-setting of the ModelSelector-component, if you want the selected model to continue overlaying user’s body, after the model category changes. This is useful, if there are several categories (i.e. ModelSelectors), for instance for shirts, pants, skirts, etc. In this case the selected shirt model will still overlay user’s body, when the category changes and the user starts selecting pants, for instance.

13. The CategorySelector-component provides gesture control for changing models and categories, and takes care of switching model categories (e.g. for shirts, pants, ties, etc.) for the same user. There is already a CategorySelector for the 1st user (player-index 0) in the scene, so you don’t need to add more.

14. If you plan for multi-user fitting-room, add one CategorySelector-component for each other user. You may also need to add the respective ModelSelector-components for model categories that will be used by these users, too.

15. Run the scene to ensure that your models can be selected in the list and they overlay the user’s body correctly. Experiment a bit if needed, to find the values of scale-factors and vertical-offset settings that provide the best model-to-body overlay.

16. If you want to turn off the cursor interaction in the scene, disable the InteractionManager-component of KinectController-game object. If you want to turn off the gestures (swipes for changing models & hand raises for changing categories), disable the respective settings of the CategorySelector-component. If you want to turn off or change the T-pose calibration, change the ‘Player calibration pose’-setting of KinectManager-component.

17. You can use the FittingRoomDemo2 scene, to utilize or experiment with a single overlay model. Adjust the scale-factor settings of AvatarScaler to fine tune the scale of the whole body, arm- or leg-bones of the model, if needed. Enable the ‘Continuous Scaling’ setting, if you want the model to rescale on each Update.

18. If the clothing/overlay model uses the Standard shader, set its ‘Rendering mode’ to ‘Cutout’. See this comment below for more information.
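
The folder layout described in steps 5 to 8 can be summarized with a small sketch. The helper below is hypothetical and only lists the file paths that the steps above lead to. It does not touch the Unity project, and the folder passed as `resourcesFolder` is whatever path your FittingRoomDemo/Resources-folder has.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical helper (not part of the K2-asset):
// lists the files expected for one model category.
public static class FittingRoomLayoutSketch
{
    public static List<string> ExpectedFiles(string resourcesFolder, string category, int modelCount)
    {
        var files = new List<string>();

        for (int i = 0; i < modelCount; i++)
        {
            // Numbered sub-folders: 0000, 0001, 0002, ...
            string modelFolder = Path.Combine(Path.Combine(resourcesFolder, category), i.ToString("D4"));

            files.Add(Path.Combine(modelFolder, "model.fbx"));         // the renamed model
            files.Add(Path.Combine(modelFolder, "preview.jpg.bytes")); // optional preview image
        }

        return files;
    }
}
```

For example, `ExpectedFiles("FittingRoomDemo/Resources", "Shirts", 2)` returns four paths: a model and a preview in Shirts/0000, and the same pair in Shirts/0001. In this case the ModelSelector would get ‘Shirts’ as model category and 2 as number of models.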

How to set up the sensor height and angle

There are two very important settings of the KinectManager-component that influence the calculation of users’ and joints’ space coordinates, hence almost all user-related visualizations in the demo scenes. Here is how to set them correctly:

1. Set the ‘Sensor height’-setting to the height of the sensor above the ground, in meters. The default value is 1, i.e. 1.0 meter above the ground, which may not be your case.

2. Set the ‘Sensor angle’-setting to the tilt angle of the sensor, in degrees. Use positive degrees if the sensor is tilted up, negative degrees – if it is tilted down. The default value is 0, i.e. the sensor is not tilted at all.

3. Because it is not so easy to estimate the sensor angle manually, you can use the ‘Auto height angle’-setting to find out this value. Select ‘Show info only’-option and run the demo-scene. Then stand in front of the sensor. The information on screen will show you the rough height and angle-settings, as estimated by the sensor itself. Repeat this 2-3 times and write down the values you see.

4. Finally, set the ‘Sensor height’ and ‘Sensor angle’ to the estimated values you find best. Set the ‘Auto height angle’-setting back to ‘Dont use’.

5. If you find the height and angle values estimated by the sensor good enough, or if your sensor setup is not fixed, you can set the ‘Auto height angle’-setting to ‘Auto update’. It will update the ‘Sensor height’ and ‘Sensor angle’-settings continuously, when there are users in the field of view of the sensor.
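
To illustrate why these two settings matter so much, here is a purely geometric sketch of how a point seen by the sensor could be mapped to world coordinates. It is not the asset’s own code. The class and method names are hypothetical, and it assumes the usual convention that X points sideways, Y up and Z away from the sensor.

```csharp
using System;

// Hypothetical geometric sketch (not the asset's own code).
public static class SensorToWorldSketch
{
    // Converts a point from sensor space (meters) to world space, given the
    // sensor height (meters) and tilt angle (degrees, positive = tilted up).
    public static double[] ToWorld(double x, double y, double z,
                                   double sensorHeight, double sensorAngleDeg)
    {
        double a = sensorAngleDeg * Math.PI / 180.0;

        // Undo the tilt (rotation around the X-axis), then lift by the sensor height.
        double worldX = x;
        double worldY = sensorHeight + y * Math.Cos(a) + z * Math.Sin(a);
        double worldZ = -y * Math.Sin(a) + z * Math.Cos(a);

        return new[] { worldX, worldY, worldZ };
    }
}
```

With this model, a point 2 meters straight ahead of a sensor that is tilted 10 degrees down, but configured with an angle of 0, ends up roughly 0.35 meters too high (2 × sin 10°). This is why wrong settings make avatars and overlays appear to float or sink.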

Are there any events, when a user is detected or lost

There are no special event handlers for user-detected/user-lost events, but there are two other options you can use:

1. In the Update()-method of your script, invoke the GetUsersCount()-function of KinectManager and compare the returned value to a previously saved value, like this:

    // usersSaved is an int field of your script, initially 0
    KinectManager manager = KinectManager.Instance;

    if(manager && manager.IsInitialized())
    {
        int usersNow = manager.GetUsersCount();

        if(usersNow > usersSaved)
        {
            // new user detected
        }

        if(usersNow < usersSaved)
        {
            // user lost
        }

        usersSaved = usersNow;
    }

2. Create a class that implements KinectGestures.GestureListenerInterface and add it as component to a game object in the scene. It has methods UserDetected() and UserLost(), which you can use as user-event handlers. The other methods could be left empty or return the default value (true). See the SimpleGestureListener or GestureListener-classes, if you need an example.
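
If you prefer the first option but want event-like callbacks, the polling logic can be wrapped in a small helper. The class below is a hypothetical sketch and not part of the K2-asset. It has no Unity dependencies and only expects to be fed the value returned by GetUsersCount().

```csharp
using System;

// Hypothetical helper (not part of the K2-asset):
// turns the polled user count into detected/lost callbacks.
public class UserCountWatcher
{
    public event Action<int> UsersDetected; // argument: how many users appeared
    public event Action<int> UsersLost;     // argument: how many users disappeared

    private int usersSaved;

    // Call once per frame with the value returned by manager.GetUsersCount().
    public void Report(int usersNow)
    {
        int diff = usersNow - usersSaved;
        usersSaved = usersNow;

        if (diff > 0 && UsersDetected != null)
            UsersDetected(diff);
        else if (diff < 0 && UsersLost != null)
            UsersLost(-diff);
    }
}
```

In a script you would create one `UserCountWatcher`, subscribe to its events in Start(), and call `watcher.Report(manager.GetUsersCount())` in Update(). Note that comparing counts cannot tell when one user leaves and another arrives within the same frame. If you need the user IDs, the gesture-listener option is the better fit.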

How to process discrete gestures like swipes and poses like hand-raises

Most of the gestures, like SwipeLeft, SwipeRight, Jump, Squat, etc. are discrete. All poses, like RaiseLeftHand, RaiseRightHand, etc. are also considered as discrete gestures. This means these gestures may report progress or not, but all of them get completed or cancelled at the end. Processing these gestures in a gesture-listener script is relatively easy. You need to do as follows:

1. In the UserDetected()-function of the script add the following line for each gesture you need to track:

    manager.DetectGesture(userId, KinectGestures.Gestures.xxxxx);

2. In GestureCompleted() add code to process the discrete gesture, like this:

    if(gesture == KinectGestures.Gestures.xxxxx)
    {
        // gesture is detected - process it (for instance, set a flag or execute an action)
    }

3. In the GestureCancelled()-function, add code to process the cancellation of the discrete gesture:

    if(gesture == KinectGestures.Gestures.xxxxx)
    {
        // gesture is cancelled - process it (for instance, clear the flag)
    }

If you need code samples, see the SimpleGestureListener.cs or CubeGestureListener.cs-scripts.

4. From v2.8 on, KinectGestures.cs is not any more a static class, but a component that may be extended, for instance with the detection of new gestures or poses. You need to add it as component to the KinectController-game object, if you need gesture or pose detection in the scene.

How to process continuous gestures, like ZoomIn, ZoomOut and Wheel

Some of the gestures, like ZoomIn, ZoomOut and Wheel, are continuous. This means these gestures never get fully completed, but only report progress greater than 50%, as long as the gesture is detected. To process them in a gesture-listener script, do as follows:

1. In the UserDetected()-function of the script add the following line for each gesture you need to track:

    manager.DetectGesture(userId, KinectGestures.Gestures.xxxxx);

2. In GestureInProgress() add code to process the continuous gesture, like this:

    if(gesture == KinectGestures.Gestures.xxxxx)
    {
        if(progress > 0.5f)
        {
            // gesture is detected - process it (for instance, set a flag, get zoom factor or angle)
        }
        else
        {
            // gesture is no more detected - process it (for instance, clear the flag)
        }
    }

3. In the GestureCancelled()-function, add code to process the end of the continuous gesture:

    if(gesture == KinectGestures.Gestures.xxxxx)
    {
        // gesture is cancelled - process it (for instance, clear the flag)
    }

If you need code samples, see the SimpleGestureListener.cs or ModelGestureListener.cs-scripts.

4. From v2.8 on, KinectGestures.cs is not any more a static class, but a component that may be extended, for instance with the detection of new gestures or poses. You need to add it as component to the KinectController-game object, if you need gesture or pose detection in the scene.

How to utilize visual (VGB) gestures in the K2-asset

The visual gestures, created by the Visual Gesture Builder (VGB) can be used in the K2-asset, too. To do it, follow these steps (and see the VisualGestures-game object and its components in the KinectGesturesDemo-scene):

1. Copy the gestures’ database (xxxxx.gbd) to the Resources-folder and rename it to ‘xxxxx.gbd.bytes’.

2. Add the VisualGestureManager-script as a component to a game object in the scene (see VisualGestures-game object).

3. Set the ‘Gesture Database’-setting of VisualGestureManager-component to the name of the gestures’ database, used in step 1 (‘xxxxx.gbd’).

4. Create a visual-gesture-listener to process the gestures, and add it as a component to a game object in the scene (see the SimpleVisualGestureListener-script).

5. In the GestureInProgress()-function of the gesture-listener add code to process the detected continuous gestures and in the GestureCompleted() add code to process the detected discrete gestures.

How to change the language or grammar for speech recognition

1. Make sure you have installed the needed language pack from here.

2. Set the ‘Language code’-setting of SpeechManager-component to the code of the grammar language you need to use. The list of language codes can be found here (see ‘LCID Decimal’).

3. Make sure the ‘Grammar file name’-setting of SpeechManager-component corresponds to the name of the grxml.txt-file in Assets/Resources.

4. Open the grxml.txt-grammar file in Assets/Resources and set its ‘xml:lang’-attribute to the language that corresponds to the language code in step 2.

5. Make the other needed modifications in the grammar file and save it.

6. (Optional since v2.7) Delete the grxml-file with the same name in the root-folder of your Unity project (the parent folder of Assets-folder).

7. Run the scene to check, if speech recognition works correctly.
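
If you are unsure which decimal code belongs to which ‘xml:lang’ value, you can also look it up with plain .NET. The helper below is a hypothetical sketch that uses the standard `System.Globalization.CultureInfo` class. It assumes a regular .NET or Mono runtime with culture data available, and it is not part of the K2-asset.

```csharp
using System;
using System.Globalization;

// Hypothetical helper (not part of the K2-asset).
public static class LanguageCodeLookup
{
    // Returns the decimal LCID for a culture name such as "en-US" or "de-DE".
    // The culture name is the same value you would put into 'xml:lang'.
    public static int GetLcid(string cultureName)
    {
        return new CultureInfo(cultureName).LCID;
    }
}
```

For instance, `LanguageCodeLookup.GetLcid("en-US")` gives 1033 and `LanguageCodeLookup.GetLcid("de-DE")` gives 1031. So a German grammar would use 1031 as language code and ‘de-DE’ as ‘xml:lang’, provided the respective language pack is installed.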

How to run the fitting-room or overlay demo in portrait mode

1. First off, add 9:16 (or 3:4) aspect-ratio to the Game view’s list of resolutions, if it is missing.

2. Select the 9:16 (or 3:4) aspect ratio of Game view, to set the main-camera output in portrait mode.

3. Open the fitting-room or overlay-demo scene and select each of the BackgroundImage(X)-game object(s). If it has a child object called RawImage, select this sub-object instead.

4. Enable the PortraitBackground-component of each of the selected BackgroundImage object(s). When finished, save the scene.

5. Run the scene and test it in portrait mode.

How to build an exe from ‘Kinect-v2 with MS-SDK’ project

By default Unity builds the exe (and the respective xxx_Data-folder) in the root folder of your Unity project. It is recommended that you use another, empty folder instead. The reason is that building the exe in the folder of your Unity project may cause conflicts between the native libraries used by the editor and the ones used by the exe, if they have different architectures (for instance the editor is 64-bit, but the exe is 32-bit).

Also, before building the exe, make sure you’ve copied the Assets/Resources-folder from the K2-asset to your Unity project. It contains the needed native libraries and custom shaders. Optionally you can remove the unneeded zip.bytes-files from the Resources-folder. This will save a lot of space in the build. For instance, if you target Kinect-v2 only, you can remove the Kinect-v1 and OpenNi2-related zipped libraries. The exe won’t need them anyway.

How to make the Kinect-v2 package work with Kinect-v1

If you have only Kinect v2 SDK or Kinect v1 SDK installed on your machine, the KinectManager should detect the installed SDK and sensor correctly. But in case you have both Kinect SDK 2.0 and SDK 1.8 installed simultaneously, the KinectManager will put preference on Kinect v2 SDK and your Kinect v1 will not be detected. The reason for this is that you can use SDK 2.0 in offline mode as well, i.e. without sensor attached. In this case you can emulate the sensor by playing recorded files in Kinect Studio 2.0.

If you want to make the KinectManager utilize the appropriate interface, depending on the currently attached sensor, open KinectScripts/Interfaces/Kinect2Interface.cs and at its start change the value of ‘sensorAlwaysAvailable’ from ‘true’ to ‘false’. After this, close and reopen the Unity editor. Then, on each start, the KinectManager will try to detect which sensor is currently attached to your machine and use the respective sensor interface. This way you could switch the sensors (Kinect v2 or v1), as to your preference, but will not be able to use the offline mode for Kinect v2. To utilize the Kinect v2 offline mode again, you need to switch ‘sensorAlwaysAvailable’ back to true.

What do the options of ‘Compute user map’-setting mean

Here are one-line descriptions of the available options:

- RawUserDepth means that only the raw depth image values, coming from the sensor will be available, via the GetRawDepthMap()-function for instance;

- BodyTexture means that GetUsersLblTex()-function will return the white image of the tracked users;

- UserTexture will cause GetUsersLblTex() to return the tracked users’ histogram image;

- CutOutTexture, combined with enabled ‘Compute color map‘-setting, means that GetUsersLblTex() will return the cut-out image of the users.

All these options (except RawUserDepth) can be tested instantly, if you enable the ‘Display user map‘-setting of KinectManager-component, too.

How to set up the user detection order

There is a ‘User detection order’-setting of the KinectManager-component. You can use it to determine how the user detection should be done, depending on your requirements. Here are short descriptions of the available options:

- Appearance is selected by default. It means that the player indices are assigned in order of user appearance. The first detected user gets player index 0, the next one gets index 1, etc. If user 0 gets lost, the remaining users are not reordered. The next newly detected user will take its place;

- Distance means that player indices are assigned depending on distance of the detected users to the sensor. The closest one will get player index 0, the next closest one – index 1, etc. If a user gets lost, the player indices are reordered, depending on the distances to the remaining users;

- Left to right means that player indices are assigned depending on the X-position of the detected users. The leftmost one will get player index 0, the next leftmost one – index 1, etc. If a user gets lost, the player indices are reordered, depending on the X-positions of the remaining users;

The user-detection area can be further limited with ‘Min user distance’, ‘Max user distance’ and ‘Max left right distance’-settings, in meters from the sensor. The maximum number of detected users can be limited by lowering the value of ‘Max tracked user’-setting.
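
The difference between the ‘Distance’ and ‘Left to right’ options can be illustrated with a short sketch. This is not the KinectManager’s implementation. The types and method names below are hypothetical, distance is approximated by the Z-coordinate, and ‘leftmost’ is assumed to mean the smallest X-coordinate.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration (not the KinectManager's own code).
public static class UserOrderSketch
{
    // A detected user: its ID plus X (left/right) and Z (distance) in meters.
    public struct DetectedUser
    {
        public long Id;
        public float X;
        public float Z;

        public DetectedUser(long id, float x, float z) { Id = id; X = x; Z = z; }
    }

    // The position in the returned list is the player index: closest user first.
    public static List<long> OrderByDistance(IEnumerable<DetectedUser> users)
    {
        return users.OrderBy(u => u.Z).Select(u => u.Id).ToList();
    }

    // The position in the returned list is the player index: leftmost user first.
    public static List<long> OrderLeftToRight(IEnumerable<DetectedUser> users)
    {
        return users.OrderBy(u => u.X).Select(u => u.Id).ToList();
    }
}
```

With these two orders the list is simply rebuilt whenever a user gets lost, which is why the remaining users may get new player indices. With ‘Appearance’ they keep the indices they already have.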

How to enable body-blending in the FittingRoom-demo, or disable it to increase FPS

If you select the MainCamera in the KinectFittingRoom1-demo scene (in v2.10 or above), you will see a component called UserBodyBlender. It is responsible for mixing the clothing model (overlaying the user) with the real world objects (including user’s body parts), depending on the distance to camera. For instance, if your arms or other real-world objects are in front of the model, you will see them overlaying the model, as expected.

You can enable the component, to turn on the user’s body-blending functionality. The ‘Depth threshold’-setting may be used to adjust the minimum distance to the front of model (in meters). It determines when a real-world object will become visible. It is set by default to 0.1m, but you could experiment a bit to see if any other value works better for your models. If the scene performance (in terms of FPS) is not sufficient, and body-blending is not important, you can disable the UserBodyBlender-component to increase performance.

How to build Windows-Store (UWP-8.1) application

To do it, you need at least v2.10.1 of the K2-asset. To build for ‘Windows store’, first select ‘Windows store’ as platform in ‘Build settings’, and press the ‘Switch platform’-button. Then do as follows:

1. Unzip Assets/Plugins-Metro.zip. This will create Assets/Plugins-Metro-folder.

2. Delete the KinectScripts/SharpZipLib-folder.

3. Optionally, delete all zip.bytes-files in Assets/Resources. You won’t need these libraries in Windows Store. All Kinect-v2 libraries reside in Plugins-Metro-folder.

4. Select ‘File / Build Settings’ from the menu. Add the scenes you want to build. Select ‘Windows Store’ as platform. Select ‘8.1’ as target SDK. Then click the Build-button. Select an empty folder for the Windows-store project and wait for the build to complete.

5. Go to the build-folder and open the generated solution (.sln-file) with Visual Studio.

6. Change the default ARM-processor target to ‘x86’. The Kinect sensor is not compatible with ARM processors.

7. Right click ‘References’ in the Project-window and select ‘Add reference’. Select ‘Extensions’ and then WindowsPreview.Kinect and Microsoft.Kinect.Face libraries. Then press OK.

8. Open solution’s manifest-file ‘Package.appxmanifest’, go to ‘Capabilities’-tab and enable ‘Microphone’ and ‘Webcam’ in the left panel. Save the manifest. This is needed to enable the sensor, when the UWP app starts up. Thanks to Yanis Lukes (aka Pendrokar) for providing this info!

9. Build the project. Run it, to test it locally. Don’t forget to turn on Windows developer mode on your machine.

How to work with multiple users

Kinect-v2 can fully track up to 6 users simultaneously. That’s why many of the Kinect-related components, like AvatarController, InteractionManager, model & category-selectors, gesture & interaction listeners, etc. have a setting called ‘Player index’. If set to 0, the respective component will track the 1st detected user. If set to 1, the component will track the 2nd detected user. If set to 2 – the 3rd user, etc. The order of user detection may be specified with the ‘User detection order’-setting of the KinectManager (component of KinectController game object).

How to use the FacetrackingManager

The FacetrackingManager-component may be used for several purposes. First, adding it as component of KinectController will provide more precise neck and head tracking, when there are avatars in the scene (humanoid models utilizing the AvatarController-component). If HD face tracking is needed, you can enable the ‘Get face model data’-setting of FacetrackingManager-component. Keep in mind that using HD face tracking will lower performance and may cause memory leaks, which can cause Unity to crash after multiple scene restarts. Please use this feature carefully.

In case of ‘Get face model data’ enabled, don’t forget to assign a mesh object (e.g. Quad) to the ‘Face model mesh’-setting. Pay also attention to the ‘Textured model mesh’-setting. The available options are: ‘None’ – means the mesh will not be textured; ‘Color map’ – the mesh will get its texture from the color camera image, i.e. it will reproduce user’s face; ‘Face rectangle’ – the face mesh will be textured with its material’s Albedo texture, whereas the UV coordinates will match the detected face rectangle.

Finally, you can use the FacetrackingManager public API to get a lot of face-tracking data, like the user’s head position and rotation, animation units, shape units, face model vertices, etc.

How to add background image to the FittingRoom-demo (updated for v2.14 and later)

To replace the color-camera background in the FittingRoom-scene with a background image of your choice, please do as follows:

1. Enable the BackgroundRemovalManager-component of the KinectController-game object in the scene.

2. Make sure the ‘Compute user map’-setting of KinectManager (component of the KinectController, too) is set to ‘Body texture’, and the ‘Compute color map’-setting is enabled.

3. Set the needed background image as texture of the RawImage-component of BackgroundImage1-game object in the scene.

4. Run the scene to check, if it works as expected.

How to move the FPS-avatars of positionally tracked users in VR environment

There are two options for moving first-person avatars in VR-environment (the 1st avatar-demo scene in K2VR-asset):

1. If you use the Kinect’s positional tracking, turn off the Oculus/Vive positional tracking, because their coordinates are different to Kinect’s.

2. If you prefer to use the Oculus/Vive positional tracking:

– enable the ‘External root motion’-setting of the AvatarController-component of avatar’s game object. This will disable the avatar motion that follows the Kinect spatial coordinates.

– enable the HeadMover-component of avatar’s game object, and assign the MainCamera as ‘Target transform’, to follow the Oculus/Vive position.

Now try to run the scene. If there are issues with the MainCamera used as positional target, do as follows:

– add an empty game object to the scene. It will be used to follow the Oculus/Vive positions.

– assign the newly created game object to the ‘Target transform’-setting of the HeadMover-component.

– add a script to the newly created game object, and in that script’s Update()-function programmatically set the object’s transform position to the current Oculus/Vive position.

How to create your own gestures

For gesture recognition there are two options – visual gestures (created with the Visual Gesture Builder, part of Kinect SDK 2.0) and programmatic gestures, coded in KinectGestures.cs or a class that extends it. The programmatic gestures detection consists mainly of tracking the position and movement of specific joints, relative to some other joints. For more info regarding how to create your own programmatic gestures look at this tip below.

The scenes demonstrating the detection of programmatic gestures are located in the KinectDemos/GesturesDemo-folder. The KinectGesturesDemo1-scene shows how to utilize discrete gestures, and the KinectGesturesDemo2-scene is about continuous gestures.

And here is a video on creating and checking for visual gestures. Please check KinectDemos/GesturesDemo/VisualGesturesDemo-scene too, to see how to use visual gestures in Unity. A major issue with the visual gestures is that they usually work in the 32-bit builds only.

How to enable or disable the tracking of inferred joints

First, keep in mind that:

1. There is ‘Ignore inferred joints’-setting of the KinectManager. KinectManager is usually a component of the KinectController-game object in demo scenes.

2. There is a public API method of KinectManager, called IsJointTracked(). This method is utilized by various scripts & components in the demo scenes.

Here is how it works:

The Kinect SDK tracks the positions of all body joints together with their respective tracking states. These states can be Tracked, NotTracked or Inferred. When the ‘Ignore inferred joints’-setting is enabled, the IsJointTracked()-method returns true when the tracking state is Tracked, and false when the state is NotTracked or Inferred. I.e. only the really tracked joints are considered valid. When the setting is disabled, the IsJointTracked()-method returns true when the tracking state is Tracked or Inferred, and false when the state is NotTracked. I.e. both tracked and inferred joints are considered valid.

How to build exe with the Kinect-v2 plugins provided by Microsoft

In case you’re targeting Kinect-v2 sensor only, and would like to avoid packing all native libraries that come with the K2-asset in the build, as well as unpacking them into the working directory of the executable afterwards, do as follows:

1. Download and unzip the Kinect-v2 Unity Plugins from here.

2. Open your Unity project. Select ‘Assets / Import Package / Custom Package’ from the menu and import only the Plugins-folder from ‘Kinect.2.0.1410.19000.unitypackage’. You can find it in the unzipped package from p.1 above. Please don’t import anything from the ‘Standard Assets’-folder of the unitypackage. All needed standard assets are already present in the K2-asset.

3. If you are using the FacetrackingManager in your scenes, import only the Plugins-folder from ‘Kinect.Face.2.0.1410.19000.unitypackage’ as well. If you are using visual gestures (i.e. VisualGestureManager in your scenes), import only the Plugins-folder from ‘Kinect.VisualGestureBuilder.2.0.1410.19000.unitypackage’, too. Again, please don’t import anything from the ‘Standard Assets’-folders of these unitypackages. All needed standard assets are already present in the K2-asset.

4. Delete KinectV2UnityAddin.x64.zip & NuiDatabase.zip (or all zipped libraries) from the K2Examples/Resources-folder. You can see them as .zip-files in the Assets-window, or as .zip.bytes-files in the Windows explorer. You are going to use the Kinect-v2 sensor only, so all these zipped libraries are not needed any more.

5. Delete all dlls in the root-folder of your Unity project. The root-folder is the parent-folder of the Assets-folder of your project, and is not visible in the Editor. You may need to stop the Unity editor. Delete the NuiDatabase- and vgbtechs-folders in the root-folder, as well. These dlls and folders are no longer needed, because they are part of the project’s Plugins-folder now.

6. Open Unity editor again, load the project and try to run the demo scenes in the project, to make sure they work as expected.

7. If everything is OK, build the executable again. This should work for both x86 and x86_64-architectures, as well as for Windows-Store, SDK 8.1.

How to build Windows-Store (UWP-10) application

To do it, you need at least v2.12.2 of the K2-asset. Then follow these steps:

1. (optional, as of v2.14.1) Delete the KinectScripts/SharpZipLib-folder. It is not needed for UWP. If you leave it, it may cause syntax errors later.

2. Open ‘File / Build Settings’ in Unity editor, switch to ‘Windows store’ platform and select ‘Universal 10’ as SDK. Make sure ‘.Net’ is selected as scripting backend. Optionally enable the ‘Unity C# Project’ and ‘Development build’-settings, if you’d like to edit the Unity scripts in Visual studio later.

3. Press the ‘Build’-button, select output folder and wait for Unity to finish exporting the UWP-Visual studio solution.

4. Close or minimize the Unity editor, then open the exported UWP solution in Visual studio.

5. Select x86 or x64 as target platform in Visual studio.

6. Open ‘Package.appxmanifest’ of the main project, and on tab ‘Capabilities’ enable ‘Microphone’ & ‘Webcam’. These may be enabled in the Windows-store’s Player settings in Unity, too.

7. If you have enabled the ‘Unity C# Project’-setting in p.2 above, right click on ‘Assembly-CSharp’-project in the Solution explorer, select ‘Properties’ from the context menu, and then select ‘Windows 10 Anniversary Edition (10.0; Build 14393)’ as ‘Target platform’. Otherwise you will get compilation errors.

8. Build and run the solution, on the local or remote machine. It should work now.

Please mind that the FacetrackingManager and SpeechRecognitionManager-components, and hence the scenes that use them, will not work with the current version of the K2-UWP interface.

How to run the projector-demo scene (v2.13 and later)

To run the KinectProjectorDemo-scene, you need to calibrate the projector to the Kinect sensor first. To do it, please follow these steps:

1. To do the needed sensor-projector calibration, you first need to download RoomAliveToolkit, and then open and build the ProCamCalibration-project in Microsoft Visual Studio 2015 or later. For your convenience, here is a ready-made build of the needed executables, made with VS-2015.

2. Then open the ProCamCalibration-page and follow carefully the instructions in ‘Tutorial: Calibrating One Camera and One Projector’, from ‘Room setup’ to ‘Inspect the results’.

3. After the ProCamCalibration finishes successfully, copy the generated calibration xml-file to the KinectDemos/ProjectorDemo/Resources-folder of the K2-asset.

4. Open the KinectProjectorDemo-scene in Unity editor, select the MainCamera-game object in Hierarchy, and drag the calibration xml-file generated by ProCamCalibrationTool to the ‘Calibration Xml’-setting of its ProjectorCamera-component. Please also check, if the value of ‘Proj name in config’-setting is the same as the projector name set in the calibration xml-file (usually ‘0’).

5. Set the projector to duplicate the main screen, enable ‘Maximize on play’ in Editor (or build the scene), and run the scene in full-screen mode. Walk in front of the sensor, to check if the projected skeleton overlays correctly the user’s body. You can also try to enable ‘U_Character’ game object in the scene, to see how a virtual 3D-model can overlay the user’s body at runtime.

How to render background and the background-removal image on the scene background

First off, if you want to replace the color-camera background in the FittingRoom-demo scene with the background-removal image, please see and follow these steps.

For all other demo-scenes: You can replace the color-camera image on scene background with the background-removal image, by following these (rather complex) steps:

1. Create an empty game object in the scene, name it BackgroundImage1, and add ‘GUI Texture’-component to it (this will change after the release of Unity 2017.2, because it deprecates GUI-Textures). Set its Transform position to (0.5, 0.5, 0) to center it on the screen. This object will be used to render the scene background, so you can select a suitable picture for the Texture-setting of its GUITexture-component. If you leave its Texture-setting to None, a skybox or solid color will be rendered as scene background.

2. In a similar way, create a BackgroundImage2-game object. This object will be used to render the detected users, so leave the Texture-setting of its GUITexture-component to None (it will be set at runtime by a script), and set the Y-scale of the object to -1. This is needed to flip the rendered texture vertically. The reason: Unity textures are rendered bottom to top, while the Kinect images are top to bottom.

3. Add KinectScripts/BackgroundRemovalManager-script as component to the KinectController-game object in the scene (if it is not there yet). This is needed to provide the background removal functionality to the scene.

4. Add KinectDemos/BackgroundRemovalDemo/Scripts/ForegroundToImage-script as component to the BackgroundImage2-game object. This component will set the foreground texture, created at runtime by the BackgroundRemovalManager-component, as Texture of the GUI-Texture component (see p2 above).

Now the tricky part: Two more cameras are needed to display the user image over the scene background – one to render the background picture, 2nd one to render the user image on top of it, and finally – the main camera – to render the 3D objects on top of the background cameras. Cameras in Unity have a setting called ‘Culling Mask’, where you can set the layers rendered by each camera. There are also two more settings: Depth and ‘Clear flags’ that may be used to change the cameras rendering order.

5. In our case, two extra layers will be needed for the correct rendering of background cameras. Select ‘Add layer’ from the Layer-dropdown in the top-right corner of the Inspector and add 2 layers – ‘BackgroundLayer1’ and ‘BackgroundLayer2’, as shown below. Unfortunately, when Unity exports the K2-package, it doesn’t export the extra layers too. That’s why the extra layers are missing in the demo-scenes.

6. After you have added the extra layers, select the BackgroundImage1-object in Hierarchy and set its layer to ‘BackgroundLayer1’. Then select the BackgroundImage2 and set its layer to ‘BackgroundLayer2’.

7. Create a camera-object in the scene and name it BackgroundCamera1. Set its CullingMask to ‘BackgroundLayer1’ only. Then set its ‘Depth’-setting to (-2) and its ‘Clear flags’-setting to ‘Skybox’ or ‘Solid color’. This means this camera will render first, will clear the output and then render the texture of BackgroundImage1. Don’t forget to disable its AudioListener-component, too. Otherwise, expect endless warnings in the console, regarding multiple audio listeners in the scene.

8. Create a 2nd camera-object and name it BackgroundCamera2. Set its CullingMask to ‘BackgroundLayer2’ only, its ‘Depth’ to (-1) and its ‘Clear flags’ to ‘Depth only’. This means this camera will render 2nd (because -1 > -2), will not clear the previous camera rendering, but instead render the BackgroundImage2 texture on top of it. Again, don’t forget to disable its AudioListener-component.

9. Finally, select the ‘Main Camera’ in the scene. Set its ‘Depth’ to 0 and ‘Clear flags’ to ‘Depth only’. In its ‘Culling mask’ disable ‘BackgroundLayer1’ and ‘BackgroundLayer2’, because they are already rendered by the background cameras. This way the main camera will render all other layers in the scene, on top of the background cameras (depth: 0 > -1 > -2).

If you need a practical example of the above setup, please look at the objects, layers and cameras of the KinectDemos/BackgroundRemovalDemo/KinectBackgroundRemoval1-demo scene.
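To make the rendering order from steps 7-9 easier to follow, here is a minimal, engine-independent sketch in Python. It is not Unity code: the Camera class and the render_order() function are hypothetical names used only for illustration, and the sketch models just the three settings discussed above (Depth, ‘Clear flags’ and ‘Culling Mask’). The ‘Default’ layer stands in for all other layers rendered by the main camera.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    name: str
    depth: int          # cameras with lower depth render first
    clear_flags: str    # "Skybox", "Solid color" or "Depth only"
    culling_mask: set   # names of the layers this camera renders


def render_order(cameras):
    """Return (camera name, clears previous image?, layers) in draw order."""
    steps = []
    for cam in sorted(cameras, key=lambda c: c.depth):
        # "Depth only" keeps the image rendered by the previous cameras.
        clears_image = cam.clear_flags in ("Skybox", "Solid color")
        steps.append((cam.name, clears_image, sorted(cam.culling_mask)))
    return steps


# The three cameras from steps 7-9, listed in arbitrary order.
cameras = [
    Camera("Main Camera", 0, "Depth only", {"Default"}),
    Camera("BackgroundCamera2", -1, "Depth only", {"BackgroundLayer2"}),
    Camera("BackgroundCamera1", -2, "Skybox", {"BackgroundLayer1"}),
]

for name, clears_image, layers in render_order(cameras):
    action = "clears the output" if clears_image else "draws on top"
    print(f"{name}: {action}, layers {layers}")
```

Only the first camera in the order clears the output. The other two use ‘Depth only’, which is why the user image and the 3D objects end up layered over the background picture.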

How to run the demo scenes on non-Windows platforms

Starting with v2.14 of the K2-asset you can run and build many of the demo-scenes on non-Windows platforms. In this case you can utilize the KinectDataServer and KinectDataClient components, to transfer the Kinect body and interaction data over the network. The same approach is used by the K2VR-asset. Here is what to do:

1. Add KinectScripts/KinectDataClient.cs as component to KinectController-game object in the client scene. It will replace the direct connection to the sensor with connection to the KinectDataServer-app over the network.

2. On the machine, where the Kinect-sensor is connected, run KinectDemos/KinectDataServer/KinectDataServer-scene or download the ready-built KinectDataServer-app for the same version of Unity editor, as the one running the client scene. The ready-built KinectDataServer-app can be found on this page.

3. Make sure the KinectDataServer and the client scene run in the same subnet. This is needed, if you’d like the client to discover automatically the running instance of KinectDataServer. Otherwise you would need to set manually the ‘Server host’ and ‘Server port’-settings of the KinectDataClient-component.

4. Run the client scene to make sure it connects to the server. If it doesn’t, check the console for error messages.

5. If the connection between the client and server is OK, and the client scene works as expected, build it for the target platform and test it there too.

How to workaround the user tracking issue, when the user is turned back

Starting with v2.14 of the K2-asset you can (at least roughly) work around the user tracking issue, when the user is turned back. Here is what to do:

1. Add FacetrackingManager-component to your scene, if there isn’t one there already. The face-tracking is needed for front & back user detection.

2. Enable the ‘Allow turn arounds’-setting of KinectManager. The KinectManager is component of KinectController-game object in all demo scenes.

3. Run the scene to test it. Keep in mind this feature is only a workaround (not a solution) for an issue in Kinect SDK. The issue is that by design Kinect tracks correctly only users who face the sensor. The side tracking is not smooth, as well. And finally, this workaround is experimental and may not work in all cases.

How to get the full scene depth image as texture

If you’d like to get the full scene depth image, instead of user-only depth image, please follow these steps:

1. Open Resources/DepthShader.shader and uncomment the commented out else-part of the ‘if’, you can see near the end of the shader. Save the shader and go back to the Unity editor.

2. Make sure the ‘Compute user map’-setting of the KinectManager is set to ‘User texture’. KinectManager is component of the KinectController-game object in all demo scenes.

3. Optionally enable the ‘Display user map’-setting of KinectManager, if you want to see the depth texture on screen.

4. You can also get the depth texture by calling ‘KinectManager.Instance.GetUsersLblTex()’ in your scripts, and then use it the way you want.

Some useful hints regarding AvatarController and AvatarScaler

The AvatarController-component moves the joints of the humanoid model it is attached to, according to the user’s movements in front of the Kinect-sensor. The AvatarScaler-component (used mainly in the fitting-room scenes) scales the model to match the user in terms of height, arms length, etc. Here are some useful hints regarding these components:

1. If you need the avatar to move around its initial position, make sure the ‘Pos relative to camera’-setting of its AvatarController is set to ‘None’.

2. If ‘Pos relative to camera’ references a camera instead, the avatar’s position with respect to that camera will be the same as the user’s position with respect to the Kinect sensor.

3. If ‘Pos relative to camera’ references a camera and ‘Pos rel overlay color’-setting is enabled too, the 3d position of avatar is adjusted to overlay the user on color camera feed.

4. In this last case, if the model has AvatarScaler component too, you should set the ‘Foreground camera’-setting of AvatarScaler to the same camera. Then scaling calculations will be based on the adjusted (overlayed) joint positions, instead of on the joint positions in space.

5. The ‘Continuous scaling’-setting of AvatarScaler determines whether the model scaling should take place only once when the user is detected (when the setting is disabled), or continuously – on each update (when the setting is enabled).

If you need the avatar to obey physics and gravity, disable the ‘Vertical movement’-setting of the AvatarController-component. Disable the ‘Grounded feet’-setting too, if it is enabled. Then enable the ‘Freeze rotation’-setting of its Rigidbody-component for all axes (X, Y & Z). Make sure the ‘Is Kinematic’-setting is disabled as well, to make the physics control the avatar’s rigid body.

If you want to stop the sensor control of the humanoid model in the scene, you can remove the AvatarController-component of the model. If you want to resume the sensor control of the model, add the AvatarController-component to the humanoid model again. After you remove or add this component, don’t forget to call ‘KinectManager.Instance.refreshAvatarControllers();’, to update the list of avatars KinectManager keeps track of.

How to setup the K2-package (v2.16 or later) to work with Orbbec Astra sensors (deprecated – use Nuitrack)

1. Go to https://orbbec3d.com/develop/ and click on ‘Download Astra Driver and OpenNI 2’. Here is the shortcut: http://www.orbbec3d.net/Tools_SDK_OpenNI/3-Windows.zip

2. Unzip the downloaded file, go to ‘Sensor Driver’-folder and run SensorDriver_V4.3.0.4.exe to install the Orbbec Astra driver.

3. Connect the Orbbec Astra sensor. If the driver is installed correctly, you should see it in the Device Manager, under ‘Orbbec’.

4. If you have Kinect SDK 2.0 installed, please open KinectScripts/Interfaces/Kinect2Interface.cs and change ‘sensorAlwaysAvailable = true;’ at the beginning of the class to ‘sensorAlwaysAvailable = false;’. More information about this action can be found here.

5. If you have ‘Kinect SDK 2.0’ installed on the same machine, look at this tip above, to see how to turn off the K2-sensor-always-available flag.

6. Run one of the avatar-demo scenes to check, if the Orbbec Astra interface works. The sensor should light up and the user(s) should be detected.

How to setup the K2-asset (v2.17 or later) to work with Nuitrack body tracking SDK (updated 11.Jun.2018)

1. To install Nuitrack SDK, follow the instructions on this page, for your respective platform. Nuitrack installation archives can be found here.

2. Connect the sensor, go to [NUITRACK_HOME]/activation_tool-folder and run the Nuitrack-executable. Press the Test-button at the top. You should see the depth stream coming from the sensor. And if you move in front of the sensor, you should see how Nuitrack SDK tracks your body and joints.

3. If you can’t see the depth image and body tracking, when the sensor is connected, this would mean Nuitrack SDK is not working properly. Close the Nuitrack-executable, go to [NUITRACK_HOME]/bin/OpenNI2/Drivers and delete (or move somewhere else) the SenDuck-driver and its ini-file. Then go back to step 2 above, and try again.

4. If you have ‘Kinect SDK 2.0‘ installed on the same machine, look at this tip, to see how to turn off the K2-sensor always-available flag.

5. Please mind, you can expect crashes, while using Nuitrack SDK with Unity. The two most common crash-causes are: a) the sensor is not connected when you start the scene; b) you’re using Nuitrack trial version, which will stop after 3 minutes of scene run, and will cause Unity crash as side effect.

6. If you buy a Nuitrack license, don’t forget to import it into Nuitrack’s activation tool. On Windows this is: <nuitrack-home>\activation_tool\Nuitrack.exe. You can use the same app to test the currently connected sensor, as well. If everything works, you are ready to test the Nuitrack interface in Unity.

7. Run one of the avatar-demo scenes to check, if the Nuitrack interface, the sensor depth stream and Nuitrack body tracking work. Run the color-collider demo scene, to check if the color stream works, as well.

8. Please mind: The scenes that rely on color image overlays may or may not work correctly. This may be fixed in future K2-asset updates.

How to control Keijiro’s Skinner-avatars with the Avatar-Controller component

1. Download Keijiro’s Skinner project from its GitHub-repository.

2. Import the K2-asset from Unity asset store into the same project. Delete K2Examples/KinectDemos-folder. The demo scenes are not needed here.

3. Open Assets/Test/Test-scene. Disable Neo-game object in Hierarchy. It is not really needed.

4. Create an empty game object in Hierarchy and name it KinectController, to be consistent with the other demo scenes. Add K2Examples/KinectScripts/KinectManager.cs as component to this object. The KinectManager-component is needed by all other Kinect-related components.

5. Select ‘Neo (Skinner Source)’-game object in Hierarchy. Delete ‘Mocaps’ from the Controller-setting of its Animator-component, to prevent playing the recorded mo-cap animation, when the scene starts.

6. Press ‘Select’ below the object’s name, to find model’s asset in the project. Disable ‘Optimize game objects’-setting on its Rig-tab, and make sure its rig is Humanoid. Otherwise the AvatarController will not find the model’s joints it needs to control.

7. Add K2Examples/KinectScripts/AvatarController-component to ‘Neo (Skinner Source)’-game object in the scene, and enable its ‘Mirrored movement’ and ‘Vertical movement’-settings. Make sure the object’s transform rotation is (0, 180, 0).

8. Optionally, disable the script components of ‘Camera tracker’, ‘Rotation’, ‘Distance’ & ‘Shake’-parent game objects of the main camera in the scene, if you’d like to prevent the camera’s own animated movements.

9. Run the scene and start moving in front of the sensor, to see the effect. Try the other skinner renderers as well. They are children of ‘Skinner Renderers’-game object in the scene.

How to track a ball hitting a wall (hints)

This is a question I was asked quite a lot recently, because there are many possibilities for interactive playgrounds out there. For instance: virtual football or basketball shooting, kids throwing balls at projected animals on the wall, people stepping on virtual floor, etc. Here are some hints how to achieve it:

1. The only thing you need in this case, is to process the raw depth image coming from the sensor. You can get it by calling KinectManager.Instance.GetRawDepthMap(). It is an array of short-integers (DepthW x DepthH in size), representing the distance to the detected objects for each point of the depth image, in mm.

2. You know the distance from the sensor to the wall in meters, hence in mm too. It is a constant, so you can filter out all depth points that are more than 1-2 meters (or less) away from the wall, i.e. closer to the sensor. They are of no interest here, because they are too far from the wall. You need to experiment a bit to find the exact filtering distance.

3. Use some CV algorithm to locate the centers of the blobs of remaining, unfiltered depth points. There may be only one blob in case of one ball, or many blobs in case of many balls, or people walking on the floor.

4. When these blobs (and their respective centers) are at maximum distance, close to the fixed distance to the wall, this would mean the ball(s) have hit the wall.

5. Map the depth coordinates of the blob centers to color camera coordinates, by using KinectManager.Instance.MapDepthPointToColorCoords(), and you will have the screen point of impact, or KinectManager.Instance.MapDepthPointToSpaceCoords(), if you prefer to get the 3D position of the ball at the moment of impact. If you are not sure how to do the sensor to projector calibration, look at this tip.
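Steps 2-4 above can be sketched outside Unity as follows. This is a minimal Python illustration under stated assumptions, not code from the K2-asset: the depth frame is taken to be a flat, row-by-row list of distances in mm (as described in step 1), and the function names, the band_mm, wall_margin_mm and hit_mm parameters and their default values are all hypothetical. In particular, wall_margin_mm is an assumption added here, so that the wall surface itself is not detected as one big blob. A simple 4-neighbour flood fill stands in for ‘some CV algorithm’.

```python
from collections import deque


def find_blobs(depth_mm, width, height, wall_mm, band_mm=1500, wall_margin_mm=50):
    """Return (center x, center y, mean depth in mm) for each blob near the wall."""
    # Step 2: keep only the points inside a band in front of the wall.
    # Invalid points (depth 0) and the wall surface itself fall outside it.
    near, far = wall_mm - band_mm, wall_mm - wall_margin_mm
    keep = [near <= d <= far for d in depth_mm]

    # Step 3: group neighbouring kept points into blobs and average them.
    seen = [False] * len(depth_mm)
    blobs = []
    for start in range(len(depth_mm)):
        if not keep[start] or seen[start]:
            continue
        seen[start] = True
        queue = deque([start])
        sum_x = sum_y = sum_d = count = 0
        while queue:
            i = queue.popleft()
            x, y = i % width, i // width
            sum_x, sum_y, sum_d, count = sum_x + x, sum_y + y, sum_d + depth_mm[i], count + 1
            for nx, ny in ((x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)):
                if 0 <= nx < width and 0 <= ny < height:
                    j = ny * width + nx
                    if keep[j] and not seen[j]:
                        seen[j] = True
                        queue.append(j)
        blobs.append((sum_x / count, sum_y / count, sum_d / count))
    return blobs


def has_hit_wall(blob, wall_mm, hit_mm=200):
    """Step 4: a blob counts as a hit when it is within hit_mm of the wall."""
    return blob[2] >= wall_mm - hit_mm


# Tiny 6x4 test frame: wall at 3000 mm, a 2x2 'ball' at 2900 mm,
# and one point of a person standing 1000 mm from the sensor.
width, height, wall_mm = 6, 4, 3000
depth = [wall_mm] * (width * height)
for x, y in ((1, 1), (2, 1), (1, 2), (2, 2)):
    depth[y * width + x] = 2900
depth[3 * width + 5] = 1000

blobs = find_blobs(depth, width, height, wall_mm)
print(blobs, [has_hit_wall(b, wall_mm) for b in blobs])
```

In a real scene you would probably also discard very small blobs as sensor noise, and then pass the blob centers to the mapping methods mentioned in step 5.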

How to create your own programmatic gestures

The programmatic gestures are implemented in KinectScripts/KinectGestures.cs or a class that extends it. The detection of a gesture consists of checking for gesture-specific poses in the different gesture states. Look below for more information.

1. Open KinectScripts/KinectGestures.cs and add the name of your gesture(s) to the Gestures-enum. As you probably know, the enums in C# cannot be extended. This is the reason you should modify it to add your unique gesture names here. Alternatively, you can use the predefined UserGestureX-names for your gestures, if you prefer not to modify KinectGestures.cs.

2. Find CheckForGesture()-method in the opened class and add case(s) for the new gesture(s), at the end of its internal switch. It will contain the code that detects the gesture.

3. In the gesture-case, add an internal switch that will check for user pose in the respective state. See the code of other simple gestures (like RaiseLeftHand, RaiseRightHand, SwipeLeft or SwipeRight), if you need an example.

In CheckForGesture() you have access to the jointsPos-array, containing all joint positions, and the jointsTracked-array, showing whether the respective joints are currently tracked or not. The joint positions are in world coordinates, in meters.

The gesture detection code usually consists of checking for specific user poses in the current gesture state. The gesture detection always starts with the initial state 0. At this state you should check if the gesture has started. For instance, if the tracked joint (hand, foot or knee) is positioned properly relative to some other joint (like body center, hip or shoulder). If it is, this means the gesture has started. Save the position of the tracked joint, the current time and increment the state to 1. All this may be done by calling SetGestureJoint().

Then, at the next state (1), you should check if the gesture continues successfully or not. For instance, if the tracked joint has moved as expected relative to the other joint, and within the expected time frame in seconds. If it has not, cancel the gesture and start over from state 0. This could be done by calling SetGestureCancelled()-method.

Otherwise, if this is the last expected state, consider the gesture completed and call CheckPoseComplete() with last parameter 0 (i.e. don’t wait), to mark the gesture as complete. In case of gesture cancellation or completion, the gesture listeners will be notified.

If the gesture is successful so far, but not yet completed, call SetGestureJoint() again to save the current joint position and timestamp, as well as increment the gesture state again. Then go on with the next gesture-state processing, until the gesture gets completed. It would be also good to set the progress of the gesture in the gestureData-structure, when the gesture consists of more than two states.
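The state handling described above can be illustrated with a small, self-contained Python sketch. It is not the code of KinectGestures.cs: the GestureData class, the helper functions and the thresholds (min_dx, max_time) are hypothetical stand-ins, loosely named after the methods mentioned in the text, and the ‘swipe right’ rule used here (hand above elbow, then hand moves right) is only an assumed example pose.

```python
from dataclasses import dataclass


@dataclass
class GestureData:
    state: int = 0
    joint_pos: tuple = (0.0, 0.0, 0.0)
    timestamp: float = 0.0
    progress: float = 0.0
    complete: bool = False
    cancelled: bool = False


def set_gesture_joint(g, now, pos):
    """Save the joint position and the current time, then go to the next state."""
    g.joint_pos, g.timestamp = pos, now
    g.state += 1


def set_gesture_cancelled(g):
    """Cancel the gesture and start over from state 0."""
    g.state, g.progress, g.cancelled = 0, 0.0, True


def check_swipe_right(g, now, hand, elbow, min_dx=0.2, max_time=1.0):
    """hand and elbow are (x, y, z) joint positions in meters."""
    if g.complete:
        return
    g.cancelled = False
    if g.state == 0:
        # State 0: has the gesture started? Here: the hand is above the elbow.
        if hand[1] > elbow[1]:
            set_gesture_joint(g, now, hand)
            g.progress = 0.5
    elif g.state == 1:
        # State 1: did the hand move far enough to the right, and in time?
        if now - g.timestamp > max_time:
            set_gesture_cancelled(g)
        elif hand[0] - g.joint_pos[0] >= min_dx:
            g.progress, g.complete = 1.0, True


elbow = (0.1, 1.0, 2.0)

swipe = GestureData()
check_swipe_right(swipe, 0.0, (0.0, 1.2, 2.0), elbow)  # gesture starts
check_swipe_right(swipe, 0.4, (0.3, 1.2, 2.0), elbow)  # 0.3 m to the right in 0.4 s
print("completed:", swipe.complete)

slow = GestureData()
check_swipe_right(slow, 0.0, (0.0, 1.2, 2.0), elbow)   # gesture starts
check_swipe_right(slow, 2.0, (0.05, 1.2, 2.0), elbow)  # too slow, gets cancelled
print("cancelled:", slow.cancelled, "state:", slow.state)
```

A gesture with more states would repeat the pattern of state 1: check the pose, then either cancel, save the joint and move on to the next state, or mark the gesture as complete.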

The demo scenes related to checking for programmatic gestures are located in the KinectDemos/GesturesDemo-folder. The KinectGesturesDemo1-scene shows how to utilize discrete gestures, and the KinectGesturesDemo2-scene is about the continuous gestures.

More tips regarding listening for discrete and continuous programmatic gestures in Unity scenes can be found above.

What is the file-format used by the KinectRecorderPlayer-component (KinectRecorderDemo)

The KinectRecorderPlayer-component can record or replay body-recording files. These are text files, where each line represents a body-frame at a specific moment in time. You can use it to replay or analyze the body-frame recordings in your own tools. Here is the format of each line. See the sample body-frames below, for reference.

0. time in seconds, since the start of recording, followed by ‘|’. All other field separators are ‘,’.

This value is used by the KinectRecorderPlayer-component for time-sync, when it needs to replay the body recording.

1. body frame identifier. should be ‘kb’.

2. body-frame timestamp, coming from the Kinect SDK (ignored by the KinectManager)

3. number of max tracked bodies (6).

4. number of max tracked body joints (25).

then, follows the data for each body (6 times)

6. body tracking flag – 1 if the body is tracked, 0 if it is not tracked (the 5 zeros at the end of the lines below are for the 5 missing bodies)

if the body is tracked, then the bodyId and the data for all body joints follow. if it is not tracked – the bodyId and joint data (7-9) are skipped

7. body ID

body joint data (25 times, for all body joints – ordered by JointType (see KinectScripts/KinectInterop.cs))

8. joint tracking state – 0 means not-tracked; 1 – inferred; 2 – tracked

if the joint is inferred or tracked, the joint position data follows. if it is not-tracked, the joint position data (9) is skipped.

9. joint position data – X, Y & Z.

And here are two body-frame samples, for reference:

”

0.774|kb,101856122898130000,6,25,1,72057594037928806,2,-0.415,-0.351,1.922,2,-0.453,-0.058,1.971,2,-0.488,0.223,2.008,2,-0.450,0.342,2.032,2,-0.548,0.115,1.886,1,-0.555,-0.047,1.747,1,-0.374,-0.104,1.760,1,-0.364,-0.105,1.828,2,-0.330,0.103,2.065,2,-0.262,-0.100,1.963,2,-0.363,-0.068,1.798,1,-0.416,-0.078,1.789,2,-0.457,-0.334,1.847,2,-0.478,-0.757,1.915,2,-0.467,-1.048,1.943,2,-0.365,-1.043,1.839,2,-0.361,-0.356,1.929,2,-0.402,-0.663,1.795,1,-0.294,-1.098,1.806,1,-0.218,-1.081,1.710,2,-0.480,0.154,2.001,2,-0.335,-0.109,1.840,2,-0.338,-0.062,1.804,2,-0.450,-0.067,1.736,2,-0.435,-0.031,1.800,0,0,0,0,0

1.710|kb,101856132898750000,6,25,1,72057594037928806,2,-0.416,-0.351,1.922,2,-0.453,-0.059,1.972,2,-0.487,0.223,2.008,2,-0.449,0.342,2.032,2,-0.542,0.116,1.881,1,-0.555,-0.047,1.748,1,-0.374,-0.102,1.760,1,-0.364,-0.104,1.826,2,-0.327,0.102,2.063,2,-0.262,-0.100,1.963,2,-0.363,-0.065,1.799,2,-0.415,-0.071,1.785,2,-0.458,-0.334,1.848,2,-0.477,-0.757,1.914,1,-0.483,-1.116,2.008,1,-0.406,-1.127,1.917,2,-0.361,-0.356,1.928,2,-0.402,-0.670,1.796,1,-0.295,-1.100,1.805,1,-0.218,-1.083,1.710,2,-0.480,0.154,2.001,2,-0.334,-0.106,1.840,2,-0.339,-0.061,1.799,2,-0.453,-0.062,1.731,2,-0.435,-0.020,1.798,0,0,0,0,0

”
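If you want to analyze the recordings outside Unity, the line format described above can be parsed with a few lines of code. The following is only a minimal sketch in Python, not part of the K2-asset: the names `Joint`, `Body`, `BodyFrame` and `parse_body_frame` are hypothetical, and the code assumes the lines follow the format exactly as listed above. For brevity, the usage example at the end uses a short synthetic line with 2 bodies and 3 joints instead of a real recording line.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Joint:
    state: int  # 0 = not tracked, 1 = inferred, 2 = tracked
    position: Optional[Tuple[float, float, float]] = None  # X, Y, Z; None if not tracked


@dataclass
class Body:
    tracked: bool
    body_id: Optional[int] = None  # present only for tracked bodies
    joints: List[Joint] = field(default_factory=list)


@dataclass
class BodyFrame:
    time: float     # seconds since the start of the recording
    timestamp: int  # body-frame timestamp coming from the Kinect SDK
    bodies: List[Body]


def parse_body_frame(line: str) -> BodyFrame:
    """Parse one line of a body-recording file (hypothetical helper)."""
    # The time is separated by '|'; all other fields are separated by ','.
    time_part, _, data_part = line.strip().partition("|")
    fields = data_part.split(",")
    if fields[0] != "kb":
        raise ValueError("not a body-frame line (expected the 'kb' identifier)")

    timestamp = int(fields[1])
    max_bodies = int(fields[2])
    max_joints = int(fields[3])
    pos = 4  # index of the next unread field

    bodies = []
    for _body in range(max_bodies):
        tracked = fields[pos] == "1"
        pos += 1
        body = Body(tracked=tracked)
        if tracked:
            # Body ID and joint data exist only for tracked bodies.
            body.body_id = int(fields[pos])
            pos += 1
            for _joint in range(max_joints):
                state = int(fields[pos])
                pos += 1
                joint = Joint(state=state)
                if state != 0:
                    # Position data follows only for inferred or tracked joints.
                    x, y, z = (float(v) for v in fields[pos:pos + 3])
                    joint.position = (x, y, z)
                    pos += 3
                body.joints.append(joint)
        bodies.append(body)

    return BodyFrame(time=float(time_part), timestamp=timestamp, bodies=bodies)


# Usage with a synthetic line: 2 bodies max, 3 joints max, only the first body tracked.
sample = "0.5|kb,123,2,3,1,42,2,0.1,0.2,0.3,0,1,-1.0,0.0,2.0,0"
frame = parse_body_frame(sample)
tracked_bodies = [b for b in frame.bodies if b.tracked]
print(frame.time, len(tracked_bodies), tracked_bodies[0].joints[0].position)
```

The parser reads the maximum body and joint counts from the line itself instead of hard-coding 6 and 25, so the same sketch should handle the real sample lines above, as long as they follow the described format.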

How to enable user gender and age detection in KinectFittingRoom1-demo scene

You can utilize the cloud face detection in KinectFittingRoom1-demo scene, if you’d like to detect the user’s gender and age, and properly adjust the model categories for him or her. The CloudFaceDetector-component uses Azure Cognitive Services for user-face detection and analysis. These services are free of charge, if you don’t exceed a certain limit (30000 requests per month and 20 per minute). Here is how to do it:

1. Go to this page and press the big blue ‘Get API Key’-button next to ‘Face API’. See this screenshot, if you need more details.

2. You will be asked to sign-in with your Microsoft account and select your Azure subscription. At the end you should land on this page.

3. Press the ‘Create a resource’-button at the upper left part of the dashboard, then select ‘AI + Machine Learning’ and then ‘Face’. You need to give the Face-service a name & resource group and select an endpoint (server address) near you. Select the free pricing tier, if you don’t expect a high volume of requests. Then create the service. See this screenshot, if you need more details.

4. After the Face-service is created and deployed, select ‘All resources’ at the left side of the dashboard, then the name of the created Face-service from the list of services. Then select ‘Quick start’ from the service menu, if not already selected. Once you are there, press the ‘Keys’-link and copy one of the provided subscription keys. Don’t forget to write down the first part of the endpoint address, as well. These parameters will be needed in the next step. See this screenshot, if you need more details.

5. Go back to Unity, open the KinectFittingRoom1-demo scene and select the CloudFaceController-game object in Hierarchy. Type the first part of the endpoint address (written down in step 4 above) into ‘Face service location’, and paste the copied subscription key into ‘Face subscription key’. See this screenshot, if you need more details.

6. Finally, select the KinectController-game object in Hierarchy, find its ‘Category Selector’-component and enable the ‘Detect gender age’-setting. See this screenshot, if you need more details.

7. That’s it. Save the scene and run it to try it out. Now, after the T-pose detection, the user’s face will be analyzed and you will get information regarding the user’s gender and age at the lower left part of the screen.

8. If everything is OK, you can set up the model selectors in the scene to be available only for users with specific gender and age range, as needed. See the ‘Model gender’, ‘Minimum age’ and ‘Maximum age’-settings of the available ModelSelector-components.

Hi, I tried to load an avatar prefab (with AvatarController and AvatarScaler attached, or added later) into the scene at runtime, but the model wouldn’t change its position according to the user (the scripts didn’t work), although it was in the Hierarchy and set active. However, once the user is lost and then tracked again, it works. So, can I make the scripts work right after adding the model to the scene? Thanks in advance!

Hi there,

If you create the avatars dynamically, please use the code below after instantiating the avatar(s). It is from LocateAvatarsAndGestureListeners.cs-script:

”

// Reset the KinectManager's list of avatars and its currently tracked users
KinectManager manager = KinectManager.Instance;
manager.avatarControllers.Clear();
manager.ClearKinectUsers();

// Find all scripts in the scene and register the enabled AvatarControllers
MonoBehaviour[] monoScripts = FindObjectsOfType(typeof(MonoBehaviour)) as MonoBehaviour[];

foreach(MonoBehaviour monoScript in monoScripts)
{
    if((monoScript is AvatarController) && monoScript.enabled)
    {
        AvatarController avatar = (AvatarController)monoScript;
        manager.avatarControllers.Add(avatar);
    }
}

“

Hi, when I try it, Unity reports 17 errors like this one: “The type or namespace name `Kinect’ does not exist in the namespace `Microsoft’. Are you missing an assembly reference?” I installed the SDK from Microsoft and read all the pages, but it doesn’t work. I use Kinect v2.

Just import the K2-asset into a new Unity project, open one of the demo scenes and run it. You need to have Kinect SDK 2.0 installed, as well.

Hi Rumen. I have an issue with loading new models and categories into the Resources folder dynamically. By dynamic I mean without having to build and run the project again for them to be recognized. For instance, the model data could be queried from a pre-stored place like a database. Do I need to perform rigging, avatar instantiation and the other steps every time a model or category is added to the Resources folder?

Loading assets dynamically is beyond the scope of the K2-asset, but I suppose you could use asset bundles to do it. Here is more info: https://docs.unity3d.com/Manual/AssetBundlesIntro.html In any case, you should make sure each new model has the needed rig, and probably modify the ModelSelector component a bit, in order to load the models from asset bundles instead of from resources.

Following up on the color display issue: the player settings have already been switched to Gamma.

Even if I disable the UserBodyBlender-component, the problem still exists. However, as I am running in portrait mode, if I disable the UserBodyBlender-component, the clothing model does not fit the body.

Hm, the portrait mode should work with or without the body blender.

If I need to build my own 3D model (avatar), how can I add a skeleton, so that I can animate the model with Kinect? Thank you

What you need is just a standard humanoid rig for the model that (as far as I know) could be done with Maya or 3dsMax. Many customers use Mixamo humanoid rigs, as well. But, as I said multiple times, I’m not a model designer and just use ready-made models in my demo scenes.

Could you please provide me with an example of dynamically updating the models at runtime with AssetBundles, as you suggested? We can consider the new models together with the existing ones, for example (Resources \ Clothing \0000 and 0001).

Such an example would require some research and coding efforts. I wouldn’t do it for free, and also don’t have the needed free time at the moment.

Hi Rumen, please help me exchange contact details with @Vistana, because we’re working on the same problem and could help each other.

Hi Rumen, I have just bought your K2 samples from the Unity store. They help me a lot.

Basically, I was trying to upload a pants model. It was uploaded correctly, but the pants are not showing at runtime. I did everything as you described in “KINECT V2 TIPS AND TRICKS”.

I don’t know where I’m making the mistake.

If you could make a short video on uploading a model, it would be very helpful for us.

My other question is this:

If we use a high-poly cloth model, is it possible to minimize the distance between the cloth and the body? I mean, can we increase the accuracy to the level shown in the following video by increasing the polygon count of the cloth models? Here is the link: https://www.youtube.com/watch?v=Mr71jrkzWq8

Hi, as you probably know, I don’t do any videos. This is because I don’t have experience in making videos and because I lack the time. Coding, updating the packages and responding to customer requests take up most of my time.

The procedure of adding new models is exactly as described here: https://rfilkov.com/2015/01/25/kinect-v2-tips-tricks-examples/#t12 It was tested many times already. Please check again whether you have a separate folder, number and ModelSelector for ‘Pants’. If you still can’t locate the issue, please zip your project (along with ‘Pants’) and send it over to me via WeTransfer. Then I’ll take a look at it, to find out what exactly went wrong. Don’t forget to mention your invoice number, as well. This is to prove your eligibility for support.

Regarding the distance between the body and the model: There is a setting of the UserBodyBlender-component of MainCamera, called ‘Depth threshold’. You can experiment with it. In my experience, when the value is too low and the model surface is curved because of some joint orientations, the user’s body may penetrate the model, and this may look weird. In my opinion, that’s why the girl in the video barely moves.

Hi Rumen, voice recognition is not working in the K2-asset. I have followed all the steps according to the description you have given in the TIPS AND TRICKS.

Hi Rumen, thanks for the response. I have overcome the issue related to uploading the model.

Is it possible to change the calibration pose of the user in the fitting room? Please let me know where it can be changed. By default it is the T-pose. I have tried, but can’t find the setting.

The other thing is that the hand interaction system does not work properly after switching the fitting-room scene to portrait orientation. Is it possible to change the interaction logic by using “Microsoft.Kinect.dll” in this program? If it is not possible, how can we improve the interaction system in the fitting room? I don’t want to operate the fitting room or change the models with gestures. Please let me know how I can do it in a better way.

Here is my invoice image link and number: https://drive.google.com/open?id=1QNd6qD7XEO9Y5nQ36wJUSuEf9GBzw8YMuJNdCBDEzaE 18966678920773

The calibration pose is a setting of KinectManager-component in the scene. It is called ‘Player calibration pose’. You can change it to something else, or set it to ‘None’, as in the FR-demo2.

The interaction system works quite well in the portrait mode, according to my tests. See here how to properly set the portrait mode: https://rfilkov.com/2015/01/25/kinect-v2-tips-tricks-examples/#t19

To turn off the interaction system, disable the InteractionManager-component of KinectController-game object, and the InteractionInputModule-component of EventSystem-game object in the scene. To turn off the gesture recognition, disable ‘Swipe to change models’ & ‘Raise hand to change categories’-settings of the CategorySelector-component of KinectController. Feel free to replace it with the interaction system that best fits your needs.

Hi Rumen,

I’m having an issue when I’m switching scenes between a scene that tracks a user’s hand movements with a real life camera view and a scene that displays the game view while tracking a user’s full body movements and vice versa. For example, in my menu screen the user can see himself on the screen and can move his hand to a position to move into the first level. Then in the first level the Kinect does not track the user’s movements. This happens in the reverse as well. If the user starts in the level the Kinect picks up his movements and the avatar moves with him, but when he dies and moves onto the end scene, the Kinect does not display him in real life, and instead just shows a white screen, but his movements are still being tracked.

Hi, do you follow the instructions in ‘Howto-Use-KinectManager-Across-Multiple-Scenes.pdf’ in the _Readme-folder of the K2-asset? They are illustrated by the demo scenes, located in KinectDemos/MultiSceneDemo-folder, as well.

Hi Rumen, I need to add my own photo to the OverlayDemo > Sprites folder, to use it as the source image of the photo button in the Fitting Room demo. I created a folder, like Superman and the other folders and files located in the Sprites folder, but the added folder containing the png file does not show up under Image (component) > Source Image. Please guide me on how I can add my own image for this purpose.

I’m not sure I understand your issue. You can set the photo-button image directly in the PhotoBtn/Image-component (as you did, I think). What’s the problem with that?

Keep in mind that before that your image asset needs to have its ‘Texture type’ set as ‘Sprite (2D and UI)’. That’s all.

Hi Rumen, first of all, thanks for all your replies. I have a question about the InteractionInputModule and InteractionManager components. Firstly, how can I add or change the mouse-hover effect when the cursor moves over an item, for example over the dressing menu items in KinectFittingRoom1? Secondly, how can I improve the grip and release process when selecting an item? The cursor jumps when I click on an item.